One of the biggest lessons I think I’ve learned over the past few years is that you have to be very careful with what you make easy to do in a software system.

When you are working within a preexisting system, it is very hard to work effectively outside the bounds of that system. Whether you are limited by time constraints, peer pressure, political decisions or just pure technical inertia, the early/legacy decisions in a system will have far-reaching impacts on the decisions of those who follow.

I’ll give you an example. On a product I worked on for years, the decision to use an object relational mapper (ORM) in the early stages was based on a desire to eliminate boilerplate code, reduce the learning curve for new developers and generally push the development of new entities down to the entire team rather than specializing this role in one person. All in all the reasoning was sound, but we failed to foresee some of the psychological effects the choice would have on developers, and those effects had a serious impact on the future of the system:

- Developers stop thinking about database impacts because they never really SEE the database

- The object model and the schema become inextricably tied *

- Accessibility to the DAL ends up being given to junior developers who may not have otherwise dealt with it yet.

- Things that could reasonably be built OUTSIDE the ORM end up dropped in without consideration because developers are following the template.

* This can be avoided by having data contracts that are specific to the DAL.

There is nothing inherently wrong with ORM, and if your DAL is properly abstracted so that the ORM isn’t propagated throughout the stack then this too isn’t necessarily a problem. I add those two caveats because I really don’t have an issue with ORM; in fact I think that, used properly, it makes way more sense than wasting days of development on repetitive and simple CRUD work.

However, the deeper issues that arose for us still came down to convenience. It was convenient to expose the friendly querying methods of the domain objects that mapped to our tables directly to the business logic assemblies. It was convenient to let junior developers write code that accessed those objects as if retrieval and persistence were magically O(1) operations. Of course in reality we discovered embarrassingly late that we had more than a few graphs of objects that were being loaded upon traversal, leading to a separate mapper-triggered SELECT for each object and its children. This is the kind of thing that only becomes apparent when you test with large datasets, get off your local machine and see some real latency.
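To make the load-on-traversal problem concrete, here is a minimal sketch using Python's built-in `sqlite3` module rather than any particular ORM. The `parent`/`child` tables, the `CountingConnection` wrapper and both loader functions are hypothetical names invented for illustration; this is not the code from the product I'm describing, just the same shape of problem.

```python
import sqlite3


def build_db(n_parents=50, children_per_parent=3):
    """Create an in-memory database with a simple parent/child graph."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE parent (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute(
        "CREATE TABLE child (id INTEGER PRIMARY KEY, parent_id INTEGER, name TEXT)"
    )
    for p in range(n_parents):
        conn.execute("INSERT INTO parent (id, name) VALUES (?, ?)", (p, f"parent-{p}"))
        for c in range(children_per_parent):
            conn.execute(
                "INSERT INTO child (parent_id, name) VALUES (?, ?)",
                (p, f"child-{p}-{c}"),
            )
    return conn


class CountingConnection:
    """Hypothetical wrapper that counts the SELECT statements it is asked to run."""

    def __init__(self, conn):
        self.conn = conn
        self.selects = 0

    def query(self, sql, params=()):
        self.selects += 1
        return self.conn.execute(sql, params).fetchall()


def load_lazily(db):
    """What traversal-triggered loading amounts to: one SELECT per parent."""
    graph = {}
    for parent_id, name in db.query("SELECT id, name FROM parent ORDER BY id"):
        rows = db.query(
            "SELECT name FROM child WHERE parent_id = ? ORDER BY id", (parent_id,)
        )
        graph[name] = [row[0] for row in rows]
    return graph


def load_eagerly(db):
    """The same graph fetched with a single JOIN."""
    graph = {}
    rows = db.query(
        "SELECT p.name, c.name FROM parent p "
        "LEFT JOIN child c ON c.parent_id = p.id "
        "ORDER BY p.id, c.id"
    )
    for parent_name, child_name in rows:
        graph.setdefault(parent_name, [])
        if child_name is not None:
            graph[parent_name].append(child_name)
    return graph


if __name__ == "__main__":
    conn = build_db()
    lazy_db, eager_db = CountingConnection(conn), CountingConnection(conn)
    load_lazily(lazy_db)
    load_eagerly(eager_db)
    print("lazy SELECTs:", lazy_db.selects, "eager SELECTs:", eager_db.selects)
```

With 50 parents the lazy version issues 51 SELECTs against the eager version's one. On a local in-memory database you will never feel the difference, which is exactly why it went unnoticed; add a network round trip to each of those statements and it becomes very visible.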

And yes, I think you could argue that QA dropped the ball here. But as a professional software developer you really never want to see issues like this get that far.

I’ve picked one example, but there are many, many others in the system I’m referring to, including but not limited to a proliferation of configuration options and files, heavy conceptual reuse of classes and functionality that are only tangentially related to each other, and an increasing reliance on a “simple” mechanism to do work outside the main code base.

Ultimately, this post has less to do with ORM and proper abstraction and more to do with understanding how your current (and future) developers will react to those decisions. I think conscious attention has to be paid to how a human will game your system. Where possible, you need to attach penalties to doing dumb things and make the right things the path of least resistance. There are entire books dedicated to framework and platform development that encompass some of these ideas, but in my opinion they really apply at every level (except maybe the one-man shop?).

LRO does it again: water on the moon! That’s so cool! NASA is important, people; we’re laying the foundation for future generations here.

http://www.space.com/scienceastronomy/090923-moon-water-discovery.html

And of course finding water is not the same as finding lakes, but imagine the potential for fuel sources and/or human sustenance. Water is damn heavy, and not something we can easily take with us.

Pretty cool stuff.

Saw this on Reddit tonight: Hanselman has updated his legendary tools list for 2009. So what was going to be an evening of actual coding is slowly turning into an evening of trying out cool new tools that have made his list. (I’m writing this blog post in Windows Live Writer after seeing it in the list.)

But what’s an hour or two of my time compared to the time that must go into compiling this list? Totally worth checking out, especially if you’ve never had a look before.

It’s nice; my Mac envy always takes a slight dip in moments like these.

I remain a huge fan of projects like LRO, and personally still believe that the disbelievers are crackpots, but I also have to admit to being a little underwhelmed by the photos listed here on NASA’s site for the Lunar Reconnaissance Orbiter.

http://www.nasa.gov/mission_pages/LRO/multimedia/lroimages/apollosites.html

Still, I’m excited “we’re” (go NASA) going back, and if anything this just really highlights for me how damn big our “little” moon is. Easy to forget that the satellite that’s taking those photos is still 50 kilometers! away from the surface, so somewhat understandable that we’re not getting the close ups I’d love to see.

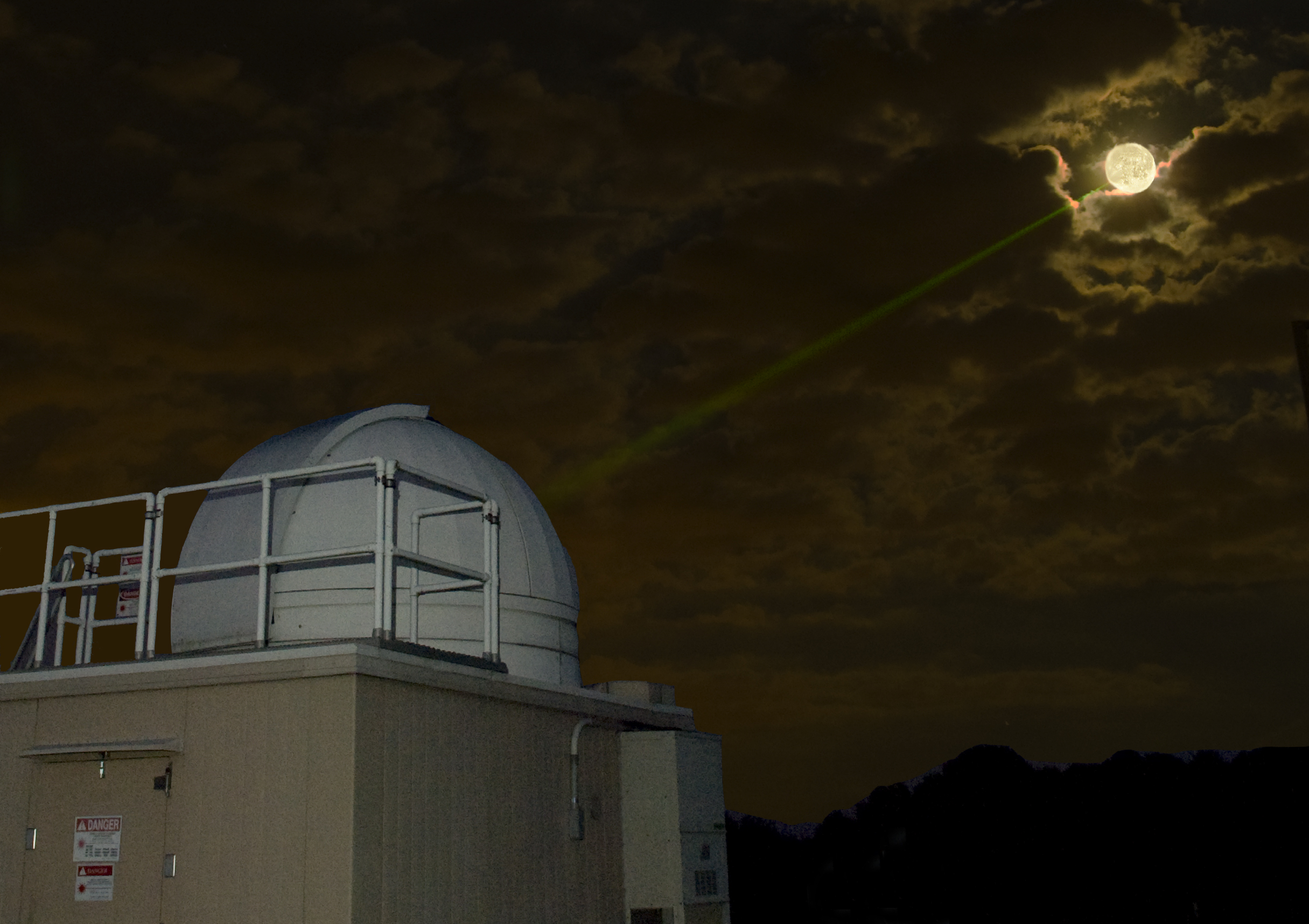

There is a pretty cute video of the LRO launch party here (check side bar) that is worth checking out. The highlight for me was seeing the communications in action: the laser ranging support for the data coming from LRO looks like a large robot with big green blinking eyes.

Congratulations and good luck, LRO team. If you can now just convince Sarah then your mission can surely be called a great success.

I work with someone who has spent a few years working for an online poker company who shall remain nameless. This company was responsible for a poker platform that supported both their own branded poker offering as well as being an engine for other companies who would layer on their branding. My colleague played an important role in taking their fairly well built existing system from thousands of users to tens of thousands of users, and in the process exposing a large handful of very deep bugs, some of which were core design issues.

Looking at this site http://www.pokerlistings.com/texas-holdem and seeing just this small sample of some of the top poker sites is a bit insane. We’re talking close to 100,000 concurrent players at peak hours JUST from the 16 top “texas hold-em” sites listed here. Who are these people? (I’m totally gonna waste some money online one of these days by the way.) I’m sure this is just the tip of the iceberg too.

It’s a great domain for learning critical systems in my opinion. Real time, high concurrency, real money, third party integrations for credits and tournaments and the vast reporting that goes on for all that data being generated.

My colleague’s experience in dealing with real time load and breaking the barriers of scalability is truly fascinating and a good source of learning for me. I realize there are bigger puzzles out there, but it’s not every day you have direct access to that experience where you work. In any case, one such learning that I am in the process of trying to apply is a more forward looking approach to load modeling. That is, rather than simply designing and testing for scalability, you actually drill down into the theoretical limit of what you are building in an attempt to predict failure.

This prediction can mean a lot of different things of course. It sits on a spectrum that runs from a vague statement about being IO bound to much more complicated models of actual transactions and usage, which let you extrapolate much richer information about the weak points in the system. In at least one case, my boss has taken this to the point where the model of load was expressed as differential equations prior to any code being written at all. Despite my agile leanings I have to say I’m extremely impressed by that. Definitely something I’d throw on my resume. So I’m simultaneously excited and intimidated at the prospect of delving into the relatively new platform that we’re building in the hopes of producing something similar. I definitely see the value in at least the first few iterations of highlighting weak points and patterns and usage. How far I can go from there is a big question mark.
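Somewhere near the simple end of that spectrum is a back-of-the-envelope bottleneck model. The sketch below is not the differential-equation model mentioned above; it only applies the standard utilization law (utilization = throughput × service demand) to a handful of resources. The `Resource` class and every number in it are made-up placeholders, not measurements from any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    demand_s: float  # seconds of this resource used per transaction (assumed)
    servers: int = 1  # number of parallel units of this resource (assumed)


def capacity(resource):
    """Upper bound on transactions per second this resource can sustain."""
    return resource.servers / resource.demand_s


def bottleneck(resources):
    """The resource that saturates first as load increases."""
    return min(resources, key=capacity)


def utilization(resource, throughput):
    """Fraction of the resource that is busy at a given throughput (tps)."""
    return throughput * resource.demand_s / resource.servers


if __name__ == "__main__":
    # Hypothetical per-transaction demands, for illustration only.
    system = [
        Resource("cpu", demand_s=0.004, servers=4),
        Resource("disk", demand_s=0.010, servers=2),
        Resource("network", demand_s=0.001, servers=1),
    ]
    worst = bottleneck(system)
    print(f"bottleneck: {worst.name}, ceiling of about {capacity(worst):.0f} tps")
    for r in system:
        print(f"{r.name}: {utilization(r, 100):.0%} busy at 100 tps")
```

Even a model this crude says which resource fails first and roughly where, which is enough to decide where to spend the measuring effort next.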

For now I’ll be starting at http://www.tpc.org/information/benchmarks.asp and then moving on to as exhaustive a list as I can make of the riskiest elements of our system. From there I’ll need to prioritize and find the dimensions that will impact our ability to scale. I expect that for each one there will be natural next steps for removing the barrier (caching, distributing load, eliminating work, etc.) and I hope to be able to put a cost next to each of those.

Simple!

Came across this list of “anti-patterns” on Wikipedia tonight. I’m tempted just to copy and paste the contents here but that would make me feel dirty.

Definitely a good list though and something worth reminding ourselves of every once in a while when thinking about the systems we build.

http://en.wikipedia.org/wiki/Anti-pattern

This is a great post on how MySpace rose and fell and how the same thing applies to Twitter (and I’d imagine Facebook as well). Some really good thoughts. Getting popular before you have your mission can forever trap you in an identity vacuum where popularity is everything.

http://codybrown.name/2009/08/06/myspace-is-to-facebook-as-twitter-is-to-______/

A good read, and the level of blogging I’d like to work towards (more pictures!).

Popular comp-sci essayist and Lisp hacker extraordinaire Paul Graham recently posted this article on the difference between a manager’s schedule and a maker’s schedule. This is really in line with my own views on the issue and sums up a big problem we have where I work: meetings get scheduled with the makers, and that has a real impact. We’ve had tons of discussions around the cost of mental context switching, but even that’s an understatement of the problem…

Great work. It’s always helpful hearing echoes of these types of thoughts beyond my own everyday sphere. Who knows, maybe I can use it to add some weight to my arguments.

http://paulgraham.com/makersschedule.html

Ok, so I have to admit that I’ve been one to disregard figures around performance when arguing with co-workers over the merit of managed code vs C/C++. I’ve even used the argument that statically typed languages like Java and C# offer more hints to the compiler that allow for optimizations not possible in unmanaged code. I still have a fairly pragmatic view of the spectrum of cost to deliver (skill set/maintainability) vs performance gains… but regardless of all that… wow, this article completely humbled and inspired me.

I don’t know shit.

http://stellar.mit.edu/S/course/6/fa08/6.197/courseMaterial/topics/topic2/lectureNotes/Intro_and_MxM/Intro_and_MxM.pdf

Around 2003 or 2004 I had a bit of a mild obsession with organizing my life into digital form, creating as many mappings as I could from my everyday existence into some kind of digital record. This of course included ideas and thoughts, writings and paintings, music and movies, friends and bits of information about those friends etc. This mass of data has gone from one disjointed medium to another (.txt files in folders, emails, blog posts, napkins) without ever really achieving any cohesiveness or real improvement in my ability to actually synthesize or act on all that information.

At that time the closest tool I found that could come close to mapping ideas and thoughts in a way that made sense to me was “TheBrain”, a piece of software intended for visualizing information and, just as importantly, the links between that information. As a user, you add “thoughts” to your brain which become nodes in a large graph of ideas, thoughts, attributes, urls etc. You can then very quickly build child, parent and sibling links between those nodes to add more context and information. Those links can then be categorized to add even more context to the relationship between ideas, which is often very important information.

Unlike a lot of “mind mapping” tools I’ve used though, the brain does not force you into a tree structure. Your thoughts can have multiple parents and siblings or “jumps” to thoughts anywhere else in your brain, whether they are directly related or not. Hyperlinks, imagine that! I think for the actual exercise of brainstorming and free flow thought this is critical. It also mimics how I visualize my own thoughts working. I’ve since tried personal wikis, which give you a lot of the same flexibility but lack the very fast keyboard entry for connections and nodes and force you to think at the level “inside” the node, by editing the page. The brain, by contrast, allows you to think and work at the level of the topology, which is really effective.
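For the programmers reading, the structure described here is just a graph where a node may have several parents plus undirected “jump” links, rather than a tree. A minimal sketch follows; the `ThoughtGraph` class and its method names are my own invention for illustration and have nothing to do with how TheBrain is actually implemented or exposed.

```python
from collections import defaultdict


class ThoughtGraph:
    """Hypothetical non-tree idea graph: many parents per node, plus jumps."""

    def __init__(self):
        self.parents = defaultdict(set)
        self.children = defaultdict(set)
        self.jumps = defaultdict(set)

    def add_child(self, parent, child):
        # A thought may be attached under any number of parents.
        self.children[parent].add(child)
        self.parents[child].add(parent)

    def add_jump(self, a, b):
        # Jumps are undirected links between otherwise unrelated thoughts.
        self.jumps[a].add(b)
        self.jumps[b].add(a)

    def siblings(self, thought):
        # Siblings are the other children of any of this thought's parents.
        found = set()
        for parent in self.parents.get(thought, set()):
            found |= self.children.get(parent, set())
        found.discard(thought)
        return found


if __name__ == "__main__":
    g = ThoughtGraph()
    g.add_child("work", "orm post")
    g.add_child("lessons learned", "orm post")  # second parent, so not a tree
    g.add_child("work", "load modeling")
    g.add_jump("orm post", "anti-patterns")
    print(sorted(g.parents["orm post"]))
    print(sorted(g.siblings("orm post")))
    print(sorted(g.jumps["anti-patterns"]))
```

Note that siblings are derived from shared parents rather than stored, so there is nothing extra to keep in sync when a thought gains a new parent.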

I stopped using the software basically because of the overhead of attempting to keep the futile mapping exercise up to date. Imagine if every idea and thought you had needed to be compulsively cataloged manually into a program in order to keep the overall picture intact. It just doesn’t work, at least not for me and not for long, and it detracts from my overall goals. I found that every time I opened my brain there was just too much catchup work to do in order to get things synced up and in order.

The other thing that has changed for me since 2004 is search. Between Google and Spotlight on my Mac I basically stopped categorizing information in the ways I used to have to. It becomes less and less necessary to build nested categories of programs or emails or documents etc. Search has exposed the entire hierarchy at a glance, in many cases keeping intact the context that the categories would have been derived from. There is still value in the information of that structure, but in a far less visible way.

Well, I’ve resurrected the brain and am using it in a new way that seems to be actually working for me. For starters I don’t keep any notes or real content in the brain; this is one of the clumsiest facets of the tool and almost seems like an afterthought. It really is all about the topology, which for me is fine since the bulk of what I need is often in dedicated stores (Subversion, SharePoint, Team Foundation Server). I’ve found any attempt to use the palettes and data entry forms just too cumbersome to bother with.

Focusing on the nodes and connections, and learning all the keyboard shortcuts, has enabled me to use the brain in a way that is a lot like how I sometimes use notepad when I need to brainstorm. Except rather than dashes, asterisks and plus-signs I’m using jumps, parents and children of small snippets of text. It’s a really cool feeling being able to navigate this very large graph of interrelated concerns and ideas with very little effort. Wikis and other hypertextual forms have served me well too, but never with so little impediment.

For high level thinking and free form brainstorming this tool is the best I’ve used. And provided I keep it to just the free flowing jumping and navigating it’s incredibly useful.